Why traditional FinOps breaks with AI workloads: a story of costs, tokens, and fat tails

- Jean Latiere

- Dec 9, 2025

- 6 min read

Updated: Jan 2

What you’ll learn: Why traditional cloud cost management breaks down with AI workloads and what shifts are required to regain control.

Part of the FinOps for AI series. → Start here: The Dawn of Agentic FinOps

The promise versus the reality

Your FinOps team reduced EC2 spend by 15% last quarter. Reserved instances contributed another 25% in savings. Reporting was clear, predictable, and the finance team was satisfied.

Then you deployed an AI-powered customer support chatbot.

Three months later, AI costs increased by 200% month-over-month. The dashboards show the spending but do not explain the underlying drivers. Finance asks for clarity, engineering checks the logs, and no one can agree on the root cause.

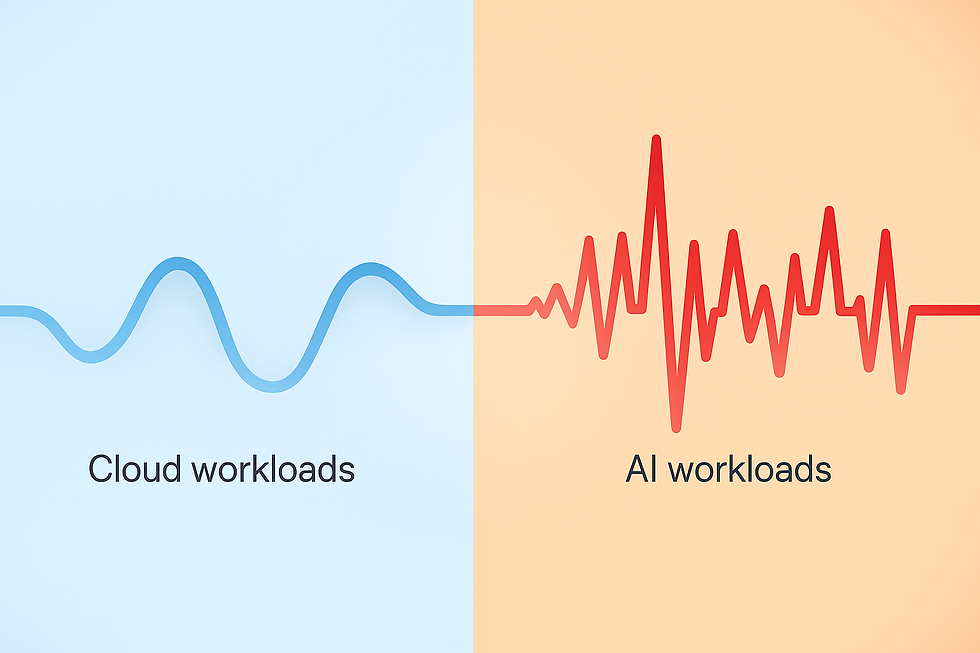

This is not a failure of FinOps as a discipline. It is a structural mismatch. Traditional FinOps was built for predictable infrastructure workloads. AI workloads operate differently. They generate cost patterns that do not resemble EC2, S3, or network transfer. The tools and processes that have served cloud teams for a decade do not provide the visibility required for tokens, embeddings, or inference activity.

Organizations must now adapt their FinOps practice to the economics of AI, or accept that AI costs will behave unpredictably and often without clear attribution.

Why AI cost signals behave differently

Traditional cloud workloads emit slow-moving and predictable cost signals. Compute operates on vCPU hours, storage on GB-months, and network activity on predictable transfer patterns. A single instance type, such as an m5.xlarge at $0.192 per hour, produces a stable monthly cost that can be forecasted with reasonable confidence. Even optimization work happens at this same cadence: rightsizing exercises, lifecycle policies, or reserved instance commitments.
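The predictability described above can be made concrete with a few lines of arithmetic. This is a minimal sketch: the hourly rate is the one quoted in the text, while the 730-hour month and the function name are assumptions made for illustration.

```python
# Hourly on-demand rate quoted in the text for an m5.xlarge.
HOURLY_RATE_USD = 0.192
# Assumption: 730 hours approximates an average month (8,760 hours / 12).
HOURS_PER_MONTH = 730


def monthly_instance_cost(hourly_rate: float, instances: int = 1,
                          hours: int = HOURS_PER_MONTH) -> float:
    """Forecast the monthly cost of always-on instances, rounded to cents."""
    return round(hourly_rate * hours * instances, 2)


print(monthly_instance_cost(HOURLY_RATE_USD))      # a single instance
print(monthly_instance_cost(HOURLY_RATE_USD, 10))  # a fleet of ten
```

The only inputs are a published rate and a count of running hours, both known in advance. Nothing in the formula depends on how end users behave.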

AI workloads do not follow this logic. They generate cost signals such as tokens processed, inference calls, and GPU-seconds for training or fine-tuning. Each API call has an immediate financial impact. This makes AI workloads behave more like a real-time billing meter than a capacity plan.

The volatility is also different. With AWS EC2, your cost is known the moment you launch an instance. With AI, the actual cost emerges only when real users interact with the model. A chatbot may be expected to consume 1 million input tokens and 500,000 output tokens per month, yet actual usage can multiply easily if users write longer queries, the model generates longer responses, or the system retains conversation history. A feature estimated at $1,600 per month can end up costing $8,800 without any infrastructure change. The variance comes entirely from user behavior and model design.
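One way to reason about this variance is to separate the planned token volumes from the drift that appears in production. The sketch below is illustrative only: the per-million-token prices and the drift factors are placeholders, not figures from this article or from any provider's price list, so it prints the cost multiplier rather than a dollar amount.

```python
def monthly_token_cost(input_tokens: int, output_tokens: int,
                       input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost in USD for a month of usage, given prices per million tokens."""
    return ((input_tokens / 1_000_000) * input_price_per_m
            + (output_tokens / 1_000_000) * output_price_per_m)


# Hypothetical prices per million tokens (placeholders, not a real price list).
INPUT_PRICE, OUTPUT_PRICE = 3.0, 15.0

# Planned volumes from the text: 1 million input and 500,000 output tokens.
planned = monthly_token_cost(1_000_000, 500_000, INPUT_PRICE, OUTPUT_PRICE)

# Hypothetical drift: retained history quadruples input, answers double in length.
actual = monthly_token_cost(4_000_000, 1_000_000, INPUT_PRICE, OUTPUT_PRICE)

print(f"cost multiplier: {actual / planned:.2f}x")
```

The infrastructure is identical in both scenarios. Only the token volumes change, and they change because of user behavior and prompt design.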

AI costs behave more like surge pricing than EC2 billing. Most interactions are cheap, and a few are expensive. The expensive ones occur often enough that they shape the monthly bill far more than infrastructure teams expect.

A real example: the misconfigured support bot

This is a common story.

A mid-market SaaS company deployed an AI support bot. In the first month, the numbers looked reasonable. The bot used a 3,500-token system prompt, customers asked short questions, and responses averaged around 1,000 tokens. Each conversation consumed roughly 5,000 tokens, at a cost of about two and a half cents. With 10,000 conversations per month, the total cost was $250. Everyone considered this a success.

In the third month, customer adoption increased. Engineering also made improvements: a retry mechanism (three attempts per failed call), deeper context windows (five previous exchanges), and a richer system prompt to increase accuracy.

Token consumption rose to 25,000 tokens per conversation. Monthly volume increased to 50,000 conversations. The new cost was $6,250.
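The figures in this example can be checked with a short calculation. The blended price per token below is not stated in the text; it is inferred from the first month (about $0.025 for a 5,000-token conversation, or $5 per million tokens) and assumed to stay constant.

```python
# Inferred from the text: about $0.025 per 5,000-token conversation.
# Assumption: one blended price covers both input and output tokens.
PRICE_PER_TOKEN_USD = 0.025 / 5_000


def monthly_cost(tokens_per_conversation: int, conversations: int) -> float:
    """Monthly spend in USD for a given conversation size and volume."""
    return round(tokens_per_conversation * conversations * PRICE_PER_TOKEN_USD, 2)


month_1 = monthly_cost(5_000, 10_000)
month_3 = monthly_cost(25_000, 50_000)

print(f"month 1: ${month_1:,.2f}")
print(f"month 3: ${month_3:,.2f}")
print(f"growth:  {month_3 / month_1:.0f}x")
```

The growth factor is the product of two independent changes: five times more tokens per conversation and five times more conversations. Neither change looks alarming on its own, which is part of why the increase went unnoticed.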

Unfortunately, the change was detected only when the monthly bill arrived, six weeks after the increase began. During that period the company spent more than $37,000 in unmonitored AI usage. No one could determine which workflow caused the spike, because no metadata had been captured at request time.

This is where traditional FinOps fails: monthly billing cycles cannot govern real-time COGS.

Even detailed Cost Explorer views cannot explain which prompts, users, or features created the increase. Without visibility at the request level, attribution becomes guesswork.

The FinOps sequence must invert for AI

Traditional FinOps follows a sequence that has worked for a decade: reporting first, allocation second, and optimization last. This retrospective approach relies on the fact that infrastructure costs accumulate slowly and predictably. AI reverses this logic.

Allocation must happen first, before the cost is created.

Every request should be enriched with metadata such as the user or session identifier, the feature invoked, the model used, and the version of the prompt template. Without these elements, it is impossible to understand cost drivers after the fact.

The bill will show total spend on a model like Claude or an AWS Bedrock service, but not the workflows that generated it.

This requirement introduces a new architectural component: the proxy layer. A proxy, gateway, or LLM-aware middleware sits between your application and the AI provider. It attaches metadata to each request before it is executed. Some companies use open-source tools such as OpenLLMetry. Others rely on API gateways like Kong or NGINX. Cloud providers are beginning to offer native features: Amazon Bedrock application inference profiles, for example, support cost allocation tags.

The core idea is the same: AI cost allocation cannot be retrofitted after the bill is generated. It must occur at the moment of invocation.
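A minimal sketch of allocation at invocation time might look like the following. Everything here is illustrative: `RequestContext`, `tagged_call`, the `send` callable, and the response shape are hypothetical names chosen for this example, not the API of any provider, gateway, or tool mentioned above.

```python
import time
import uuid
from dataclasses import dataclass, asdict
from typing import Callable


@dataclass(frozen=True)
class RequestContext:
    """Allocation metadata named in the text; field names are illustrative."""
    user_id: str
    feature: str
    model: str
    prompt_version: str


def tagged_call(ctx: RequestContext, prompt: str,
                send: Callable[[str, str], dict],
                sink: Callable[[dict], None]) -> dict:
    """Build the allocation record first, invoke the model, then emit usage."""
    record = {"request_id": str(uuid.uuid4()), "ts": time.time(), **asdict(ctx)}
    try:
        response = send(ctx.model, prompt)
        record["status"] = "ok"
        record["input_tokens"] = response["input_tokens"]
        record["output_tokens"] = response["output_tokens"]
        return response
    except Exception:
        record["status"] = "error"  # failed calls and retries stay attributable
        raise
    finally:
        sink(record)  # always emitted, whether the call succeeded or not


# Stand-in for a real provider client (hypothetical response shape).
def fake_send(model: str, prompt: str) -> dict:
    return {"text": "...", "input_tokens": len(prompt.split()), "output_tokens": 12}


events: list[dict] = []
ctx = RequestContext("user-42", "support_chat", "example-model", "v3")
tagged_call(ctx, "Where is my invoice?", fake_send, events.append)
print(events[0]["feature"], events[0]["prompt_version"], events[0]["status"])
```

The record is created before the provider is called, so every attempt carries its user, feature, model, and prompt version even when the call fails. In a real deployment the `sink` would forward records to an observability pipeline rather than to an in-memory list.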

Ingestion must be real-time.

Cost Explorer updates may lag by 24 to 48 hours. This is acceptable for EC2 or S3, but not for workloads where a single misconfigured prompt can create thousands of dollars of spend within hours.

Token counts should be captured as soon as the model returns a response, streamed into your observability platform, and monitored continuously.
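As a rough illustration, token counts can feed a sliding-window spend monitor that flags a breach within minutes rather than at invoice time. The class below is a sketch under simplifying assumptions: a single blended price per token, an in-memory queue, and a hypothetical hourly budget. A production system would stream the same events to an observability platform.

```python
from collections import deque


class SpendMonitor:
    """Track spend over a sliding time window and flag budget breaches."""

    def __init__(self, price_per_token: float, window_seconds: float,
                 budget_usd: float):
        self.price_per_token = price_per_token  # assumption: one blended price
        self.window_seconds = window_seconds
        self.budget_usd = budget_usd
        self._events = deque()  # (timestamp, cost) pairs, oldest first
        self.window_spend = 0.0

    def record(self, ts: float, input_tokens: int, output_tokens: int) -> bool:
        """Ingest one response's token counts; return True if over budget."""
        cost = (input_tokens + output_tokens) * self.price_per_token
        self._events.append((ts, cost))
        self.window_spend += cost
        # Expire events that have fallen out of the window.
        while self._events and self._events[0][0] <= ts - self.window_seconds:
            _, expired = self._events.popleft()
            self.window_spend -= expired
        return self.window_spend > self.budget_usd


# Hypothetical settings: $5 per million tokens, one-hour window, $1 budget.
monitor = SpendMonitor(price_per_token=5e-6, window_seconds=3600, budget_usd=1.00)
print(monitor.record(ts=0, input_tokens=100_000, output_tokens=50_000))
print(monitor.record(ts=60, input_tokens=100_000, output_tokens=0))
print(monitor.record(ts=4000, input_tokens=1_000, output_tokens=0))
```

The second call pushes the hourly spend past the budget and returns `True`. By the third call the earlier events have aged out of the window, so the alert clears.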

Reporting must be continuous, not monthly.

Teams need to see costs accumulate in near real-time and understand which user actions or deployments are responsible. AI workloads require dashboards that behave more like application performance monitoring than traditional cloud cost reporting.
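Once each request carries metadata, a continuous report is little more than a running aggregation over any tagged dimension. The sketch below assumes records shaped like the metadata described earlier. The field names, sample values, and blended price are hypothetical.

```python
from collections import defaultdict


def cost_by(records: list[dict], dimension: str,
            price_per_token: float) -> list[tuple[str, float]]:
    """Sum cost per value of a metadata dimension, most expensive first."""
    totals: dict[str, float] = defaultdict(float)
    for r in records:
        tokens = r["input_tokens"] + r["output_tokens"]
        totals[r[dimension]] += tokens * price_per_token
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Hypothetical request records, as a proxy layer might emit them.
records = [
    {"feature": "support_chat", "prompt_version": "v3",
     "input_tokens": 20_000, "output_tokens": 5_000},
    {"feature": "support_chat", "prompt_version": "v2",
     "input_tokens": 4_000, "output_tokens": 1_000},
    {"feature": "search", "prompt_version": "v1",
     "input_tokens": 800, "output_tokens": 200},
]

for name, cost in cost_by(records, "feature", price_per_token=5e-6):
    print(f"{name}: ${cost:.4f}")
```

Changing the `dimension` argument to `"prompt_version"` answers a different question from the same data: which deployment of the prompt is driving the spend.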

This inverted sequence (allocation first, real-time ingestion, continuous reporting) is at the heart of AI-ready FinOps.

The business case: why unit economics matter

Infrastructure metrics such as cost per instance or cost per region are useful for traditional cloud forecasting, but they do not explain the relationship between AI usage and business value. AI introduces direct, usage-based COGS, which makes unit economics the central measure.

Consider the customer support bot again. A typical conversation costs around 2 cents in model usage. Infrastructure overhead, such as API Gateway, Lambda, or the vector database used for retrieval, adds a few tenths of a cent. The total cost per conversation is roughly $0.02.

A human agent handling a support ticket costs around $18 per interaction. The difference is stark: each automated conversation generates a saving of approximately $17.98.

The saving from one automated conversation pays for nearly 900 additional AI interactions. These numbers make the business impact visible. They also help determine where AI is appropriate, where a human should intervene, and which workflows are worth optimizing.
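The comparison reduces to two numbers: the saving per automated conversation and the number of AI interactions that saving would fund. The sketch below uses the costs quoted in the text; the function name is illustrative.

```python
def unit_economics(ai_cost: float, human_cost: float) -> tuple[float, float]:
    """Return (saving per conversation, AI interactions funded by that saving)."""
    saving = human_cost - ai_cost
    return saving, saving / ai_cost


# Figures from the text: about $0.02 per AI conversation, $18 per human ticket.
saving, funded = unit_economics(ai_cost=0.02, human_cost=18.00)

print(f"saving per conversation: ${saving:.2f}")
print(f"AI interactions funded by one saving: {funded:.0f}")
```

Running the same function with the cost of a longer, retry-heavy conversation shows how quickly the margin narrows, which is why cost per conversation is worth tracking as a first-class metric.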

Traditional dashboards can show that $45,000 was spent on a model last month. They do not explain which feature produced that cost or whether the spending created value. Unit economics closes this gap by linking spend to specific outcomes: cost per conversation, cost per task, cost per search, or cost per generated document. Once this link exists, prioritisation becomes straightforward.

Key takeaways

AI workloads behave differently from traditional cloud workloads. They generate cost signals in real time, at high variance, and with direct impact on margins. The classic FinOps sequence (reporting, allocation, optimisation) cannot govern this behaviour.

Regaining control requires a shift in practice. Cost attribution must occur before the cost exists, which introduces the need for proxy infrastructure. Cost ingestion must occur in real time. Reporting must move from a monthly cadence to continuous visibility. Most importantly, AI spending must be interpreted through business-level unit economics rather than infrastructure-level metrics.

The result is a FinOps practice that is accurate, predictable, and aligned with how AI workloads actually operate.

Is your FinOps practice ready for AI workloads?

Traditional tools often assume predictable usage and monthly billing cycles. AI workloads require a different approach. To help organisations understand their current readiness, OptimNow provides an AI Cost Readiness Assessment. It evaluates the core dimensions of AI cost management, from real-time visibility and allocation practices to unit economics and ROI tracking.

Connect to the AI Cost Readiness Assessment to identify your current state and get a strategic roadmap.
